How do they create tokens?

- Starts with crawling data from the internet to build a massive dataset

- Filter data with classifier to removing part of the data that low performance (noisy, duplicate content, low-quality text, and irrelevant information)

- Cleaned data will be compressed into something the machine usable

So instead of feeding a raw text into model, it converted into tokens

Tokenization

Example: FineWeb-Edu dataset1

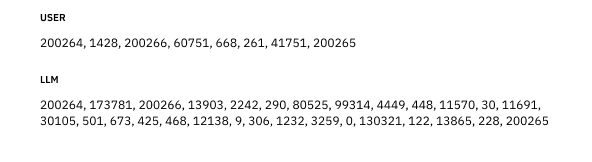

- With the cleaned dataset, the tokenizer firstly will breaking down the raw text into smaller pieces (also tokens). These can be words, parts of words, or punctuation,... depending on the tokenizer

- The tokens will be assigned a unique ID, commonly a number. For example, the word cat might be one token, the word running could split into run and ning

- Tokenization reduces raw text to numbers that a language model can process, so when a model usually introduced with 15 trillion tokens that means 15 trillion of these units were created after cleaning and filtering.

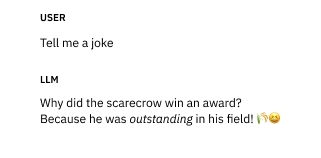

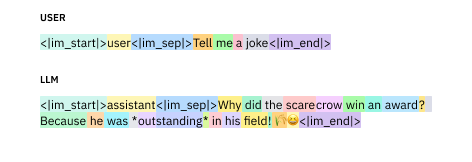

The chat

Note: Did you notice asterisks (*) is recognized as 2 tokens? The first one have a space before.

The mess

Some tokenizer will applied Byte Pair Encoding (BPE, or digram coding)2 to help model more generalized across the messy dataset.

Starts with individual tokens, it will repeatedly find most frequent pair of token in the raw data, and merges them into a single new token.

For example: "you" and "are" in dataset obviously appear together a lot, the BPE algorithm might create "you are" as one token instead of two.

This method can reduces the number of tokens, speeding up training and inference.

References

- Deep Dive into LLMs like ChatGPT by Andrej Karpathy

- 🍷 FineWeb: decanting the web for the finest text data at scale